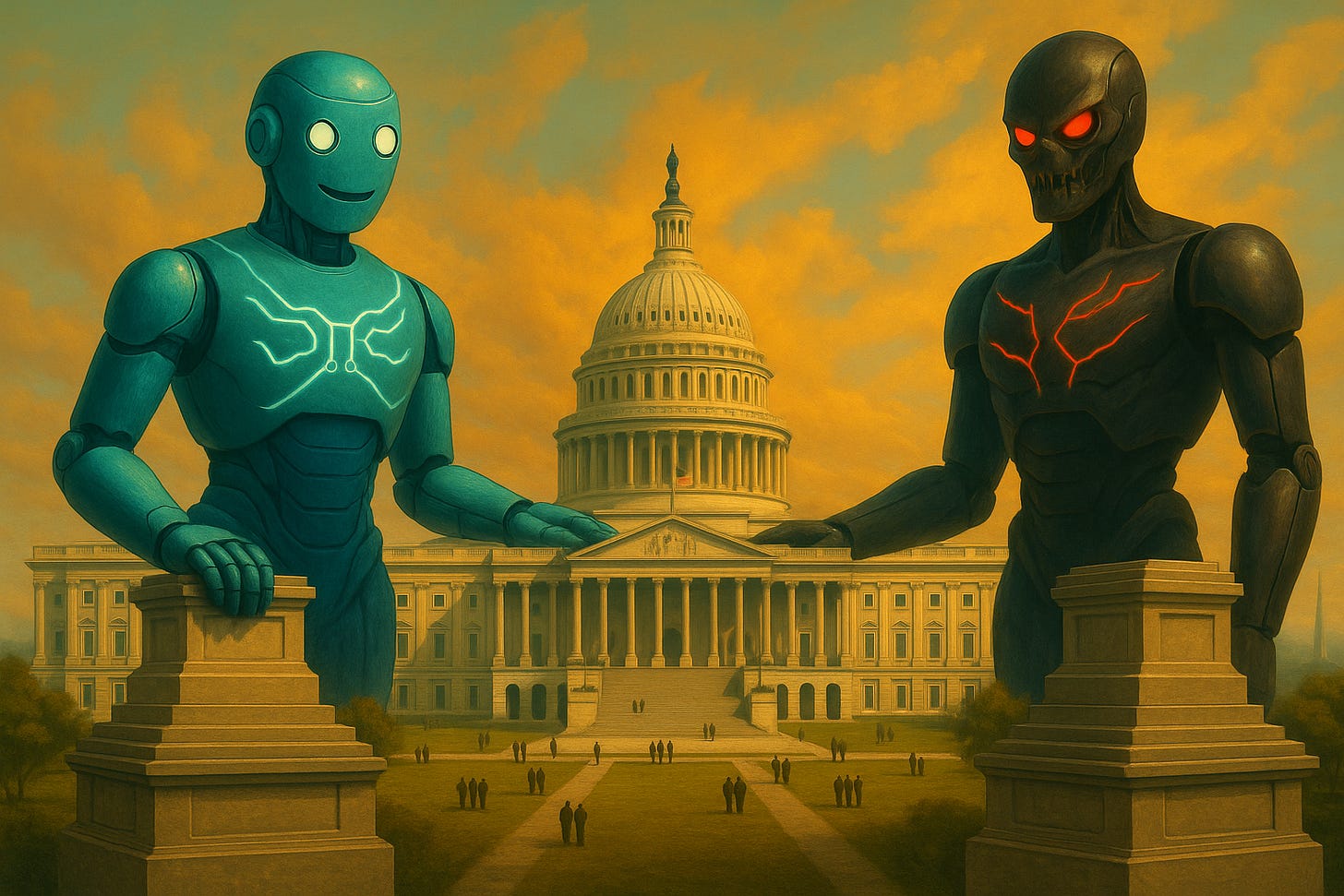

rule30 #2: AGI Goes to Washington

Senators reject regulation ban, alignment is still tough, and AI slop goes mainstream

It’s the second outing of the new season of Moneyball. I’m Harry Law, a former researcher at Google DeepMind who writes Learning From Examples – a newsletter about the history of AI and its future. Each month, I work with the team at rule30 to help make sense of what’s been happening in AI over the last four weeks. Then I bundle it together, wrap it tightly, and send it on to our dear readers.

Last time we wrote about Meta’s new plans for its AI unit. Since then, Zuck has confirmed that ‘Meta Superintelligence Labs’ will be headed by former Scale AI CEO Alexandr Wang and former GitHub CEO Nat Friedman.

Several big hitters from OpenAI, Google DeepMind, and Anthropic have joined the team, no doubt lured by the prospect of building an Abraham Lincoln chatbot rather than an alleged $100M signing bonus.

The cash seems ludicrous at first blush, but it makes a kind of sense if you believe the current LLM paradigm can get us all the way to human-level AI.

That goes for politicians as much as it does for developers. As UK technology minister Peter Kyle put it: “By the end of this parliament we're going to be knocking on artificial general intelligence.” And in Washington, as the Senate voted to strip a proposed federal moratorium on state AI laws from the budget bill, Representative Raja Krishnamoorthi announced he is working on an AGI Safety Act.

Confirming that we are truly living in a sci-fi timeline, Representative Jill Tokuda asked: “Should we also be concerned that authoritarian states like China or Russia may lose control over their own advanced systems?” Others, including the mighty RAND Corporation, are wondering the same thing. The think-tank outlined eight scenarios for a world with AGI, including those that end in American hegemony, Chinese dominance, and, uh, an ‘AGI coup’ where the systems run the show.

This last scenario is what happens when we get alignment, which we can think of as ‘getting AI to do what we really want’, badly wrong.

One part of this idea is ‘value alignment’, which deals with whether an AI’s actions are ethically appropriate. Recent work suggests that the values of current LLMs “exhibit high degrees of structural coherence”. In other words, their preferences fit together consistently rather than at random, and different models seem to converge on a similar set of values.

Then again, it’s not really clear how deep these preferences go. New work argues that models don’t actually have stable preferences at all, because their responses tend to be highly sensitive to how they are prompted.

The upshot is that alignment remains a tough old beast. As the folks at Anthropic like to remind us, even if your model looks friendly, it might just be faking it. This is clearly bad if you start deploying AI in places where it could cause some damage.

And of course, you are more likely to miss any deception when you think you are in a race. This is the concern a recent paper raises in the context of US-China rivalry, but it’s also the idea that animates the AI 2027 forecasting project from April.

In the world of capabilities, a few interesting papers suggest that the large model project is both predictable and remarkable.

A paper in Nature Machine Intelligence found that multimodal LLMs can sort hundreds of everyday objects into a conceptual map that mirrors how people naturally group things, with the models’ representations even matching activity in brain areas that respond to faces, places and bodies.

Another post argued that the story of modern deep learning is basically entirely about data. In 2012 AlexNet unlocked the ImageNet dataset; in 2017 transformers unlocked the internet as text; in 2022 RLHF unlocked learning from humans; and in 2024 reasoning unlocked learning from verifiers.

Some work on self-updating language models was wildly misinterpreted on X dot com, but does suggest a promising path towards learning in the real world. This is the broad idea behind Google DeepMind’s ‘Era of Experience’ thesis, which I take to be directionally correct.

These questions are hugely relevant to the future of the AI project, but not necessarily for how people use the technology today.

One recent survey finds that workers using AI report 3x productivity gains on a fifth of their tasks, while another reports that teachers using AI save six hours a week.
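A 3x gain on a fifth of tasks is not the same as a 3x gain overall. As a back-of-the-envelope sketch, the snippet below applies Amdahl's law, assuming (our assumption, not the survey's) that the AI-assisted fifth of tasks also takes up a fifth of working time.

```python
def overall_speedup(fraction_affected: float, local_speedup: float) -> float:
    """Amdahl's law: overall speedup when only part of the work gets faster."""
    # Time left = untouched share of the work + accelerated share divided by its speedup.
    remaining_time = (1 - fraction_affected) + fraction_affected / local_speedup
    return 1 / remaining_time

# Assumption: the 1/5 of tasks that AI speeds up also accounts for 1/5 of working time.
speedup = overall_speedup(fraction_affected=0.2, local_speedup=3.0)
print(f"Overall speedup: {speedup:.2f}x")  # prints "Overall speedup: 1.15x"
```

Under that assumption, tripling the speed of a fifth of the work lifts overall output by only about 15%. The figure would be larger if those tasks take up a bigger share of the working day.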

The latter comes as OpenAI announces the launch of the US National Academy for AI Instruction, a five-year initiative to help 400,000 teachers to use large models to “lead the way in shaping how AI is used and taught in classrooms across the country.”

And Microsoft, which also wanted to remind people it still has an independent AI group, said it had taken “a big step towards medical superintelligence” with a new model capable of solving open-ended medical queries.

Doctors and teachers are one thing, but what about the unsung heroes: musicians and movie producers?

Well, in the past few weeks, an AI band called Velvet Sundown has skyrocketed on Spotify, recently surpassing one million monthly listeners. The band’s sound resembles 70s rock and folk, and its songs have been added to popular playlists, much to the chagrin of artists already struggling with meagre streaming revenues.

In Hollywood, Time covered the video generation start-up Moonvalley. The company owns AI film studio Asteria, which was co-founded this year by filmmaker and actress Natasha Lyonne and filmmaker Bryn Mooser. Asteria has been advising Moonvalley on the development of Marey, an AI model now available to filmmakers with subscriptions starting at $14.99 a month.

But what does AI actually mean for the future of entertainment? That is the question I tried to answer in an essay earlier this month, which argues that large models will make entertainment both much better and much worse depending on how you react to the technology.

And to wrap up, some wildcards worthy of your time.

This lecture on AI and economic growth from Chad Jones, running through what some growth models look like for a world with powerful AI.

A cool new paper that asked: “To what extent (if any) can the concept of introspection be meaningfully applied to LLMs?” The authors argue that, in a scenario where an LLM correctly infers the value of its own temperature parameter, we should legitimately consider it a minimal example of introspection.
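For readers unfamiliar with it, the temperature parameter controls how a model turns its raw output scores (logits) into sampling probabilities. Here is a minimal sketch of the standard temperature-scaled softmax, applied to made-up logits for a hypothetical three-token vocabulary; it illustrates the general idea and is not the sampling code of any particular model.

```python
import math

def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Convert raw scores to probabilities; lower temperature sharpens, higher flattens."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max before exponentiating, for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical logits for a three-token vocabulary (illustrative values only).
logits = [2.0, 1.0, 0.1]
for t in (0.5, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

Lower temperatures concentrate probability on the top-scoring token, so output becomes more predictable; higher temperatures spread it out, so output becomes more varied. Temperature is a sampling setting chosen outside the model rather than something learned in its weights, which is part of what makes a model correctly inferring its value an interesting test case.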

Google DeepMind said it made a major breakthrough in hurricane forecasting, introducing a system that it says can predict both the path and intensity of tropical cyclones with greater accuracy.

X’s Grok went loco and was taken offline by engineers, in an episode that reminded me of Microsoft’s Tay chatbot debacle from 2016. Also, Grok sometimes thinks it’s Elon Musk.

Anthropic made Claude run a vending machine business, which did not turn out to be particularly profitable. Better luck next time, Claude.

That’s the whistle for the end of edition two. Let us know what you think, and what we should look at in future outings.

rule30 is a quantitative venture capital fund. We've dedicated three years to developing groundbreaking AI and machine learning algorithms and systematic strategies to re-engineer the economics of startup investing.